Handling Docker Volume Permissions without Root Privileges

Hello there! Today, I'd like to introduce you to a typical Docker volume issue. Sometimes our applications are not as Cloud Native as they should be, or they are not as mature as we would like.

This article describes how to share Docker volumes between the host and a container without root privileges. Some open-source projects still require root privileges! However, running containers as root is a HIGH-RISK practice and should be avoided in production environments. It's a....

Let's start with a simple Node.js HTTP server that writes logs and shares them with the host through a mounted Docker volume.

Let's set up permissions

Disclaimer! Why not use Filebeat or Logstash to work with logs? I prefer to keep things simple and easy. An Express server will list the logs as static files, which is very secure! 🤭

Let's start by creating a Dockerfile for Node.js applications.

- Dockerfile-node

FROM node:lts-alpine AS base
ARG UID=2000
ARG GID=2000
ARG SOURCE
USER root
# adds usermod and groupmod
RUN apk --no-cache add shadow
RUN usermod -u $UID node && groupmod -g $GID node

FROM base AS assets
WORKDIR /home/node/app
COPY $SOURCE /home/node/app
RUN mkdir -p /home/node/app/shared/logs
RUN chown -R $UID:$GID /home/node/app

FROM assets AS app
EXPOSE 3000
USER node
RUN npm install
CMD ["npm", "start"]

This file accepts the following build arguments: UID (a user identifier), GID (a group identifier), and SOURCE (the application directory). The base stage installs the shadow package to get usermod and groupmod, then changes the UID and GID of the image's built-in node user to those values, so files the app creates in the shared volume belong to the matching host user.

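Now let's create the two Node.js applications, starting with the one that writes logs. Create the shared directory yourself, as your regular user: if a bind-mount source does not exist, Docker typically creates it owned by root.

mkdir -p shared/logs apps/simple-express
cd apps/simple-express
npm init
npm install --save express morgan rotating-file-stream

- apps/simple-express/index.js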

const express = require("express")
const morgan = require("morgan")
const rfs = require("rotating-file-stream")
const path = require("path")

const app = express()
const port = 3000
const logsPath = path.join(__dirname, "shared/logs")

// rotate log.log when it reaches 10 MB or once a day
const rfsStream = rfs.createStream(path.join(logsPath, "log.log"), {
  size: "10M",
  interval: "1d",
})

// write access logs to the shared volume...
app.use(morgan("combined", { stream: rfsStream }))
// ...and to stdout
app.use(morgan("combined"))

app.get("/", (_req, res) => {
  res.send("Hello World!")
})

app.listen(port, () => {
  console.log(`App listening on port ${port}`)
})

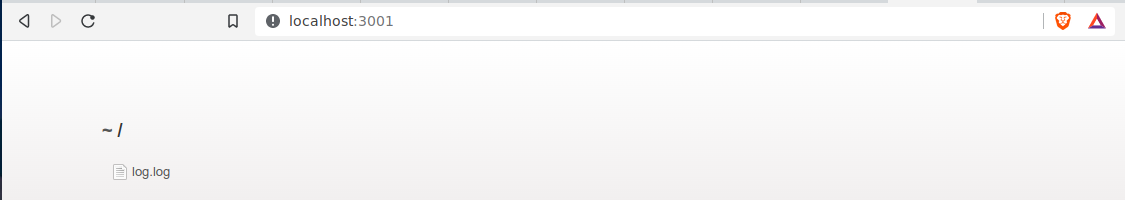

Next, return to the project root and create the static file server:

cd ../..
mkdir -p apps/static-server-express
cd apps/static-server-express
npm init
npm install --save express serve-index

- apps/static-server-express/index.js

const express = require("express")
const path = require("path")
const serveIndex = require("serve-index")

const app = express()
const port = 3001
const logsPath = path.join(__dirname, "shared/logs")

// serve the log files and a browsable directory listing
app.use("/", express.static(logsPath), serveIndex(logsPath, { icons: true }))

app.listen(port, () => {
  console.log(`App listening on port ${port}`)
})

Don't forget to set the "start" script in both package.json files.

{
  "scripts": {
    "start": "node index.js"
  }
}

Next, create the Docker Compose file.

- docker-compose.yml

version: '3.8'
services:
  node:
    build:
      context: ./
      dockerfile: Dockerfile-node
      args:
        SOURCE: "./apps/simple-express"
        GID: ${GID}
        UID: ${UID}
    volumes:
      - "./shared:/home/node/app/shared"
    environment:
      - NODE_ENV=production
    ports:
      - "3000:3000"
  static:
    build:
      context: ./
      dockerfile: Dockerfile-node
      args:
        SOURCE: "./apps/static-server-express"
        GID: ${GID}
        UID: ${UID}
    volumes:
      - "./shared:/home/node/app/shared"
    environment:
      - NODE_ENV=production
    ports:
      - "3001:3001"
    depends_on:
      - node

Observe the UID and GID variables: they are passed as build arguments, so the host user and the container's node user have the identical user id (UID) and group id (GID), and both services mount the same host directory (volume). The node container is responsible for writing logs, while the static container lists and reads them.
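Why must the IDs match? Linux checks a file's owner bits when the process UID equals the file's UID, the group bits when one of the process's groups matches the file's GID, and the "other" bits otherwise; root bypasses these read checks. Below is a simplified sketch of that rule; `canRead` is a hypothetical helper that ignores ACLs and capabilities, and the 640 file mode is an illustrative example.

```javascript
// Simplified sketch of the POSIX permission check for reading a file.
// Ignores ACLs, capabilities and execute-bit special cases.
// canRead is a hypothetical helper for illustration only.
function canRead(file, proc) {
  // Root (UID 0) can read any file.
  if (proc.uid === 0) return true;
  // Owner match: only the owner bits are consulted.
  if (proc.uid === file.uid) return (file.mode & 0o400) !== 0;
  // Group match (primary or supplementary group): only the group bits.
  if (proc.gids.includes(file.gid)) return (file.mode & 0o040) !== 0;
  // Everyone else: the "other" bits.
  return (file.mode & 0o004) !== 0;
}

// A log file written by UID/GID 1000 with mode 640 (rw-r-----).
const logFile = { uid: 1000, gid: 1000, mode: 0o640 };

console.log(canRead(logFile, { uid: 1000, gids: [1000] })); // true: owner
console.log(canRead(logFile, { uid: 2000, gids: [1000] })); // true: group
console.log(canRead(logFile, { uid: 2000, gids: [2000] })); // false: other
```

With matching UIDs, the host user, the node container and the static container all fall into the owner case, so no root access or world-readable modes are needed.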

Congratulations, you have completed your Compose file. Let's start the containers.

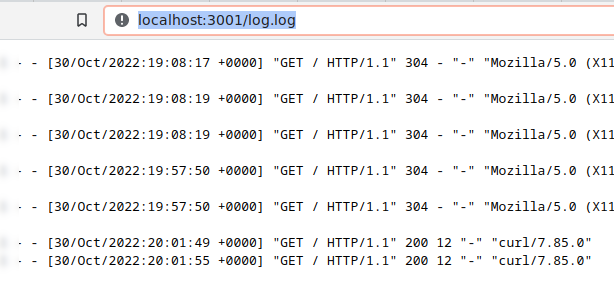

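UID=${UID} GID=${GID} docker-compose up -d

The inline form assumes your shell defines UID and GID, as zsh does. In bash, UID is read-only and GID is not defined, so write the values to a .env file next to docker-compose.yml instead; Compose reads it for variable substitution:

echo "UID=$(id -u)" > .env
echo "GID=$(id -g)" >> .env
docker-compose up -d

Now, send a few HTTP requests to port 3000 and read the log file on port 3001.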

curl http://localhost:3000

You can validate file and directory permissions by truncating the log file from the host and making new requests:

truncate -s0 ./shared/logs/log.log
curl http://localhost:3000
curl http://localhost:3001/log.log

That's it!

If you prefer, you can clone the repository: https://github.com/williampsena/docker-recipes

We built some containers that share a volume without root access. Remember: if you want to use a specific user, the UID and GID must be the same on the host and in the containers.

Your kernel has already been upgraded; see you later.

Time for feedback!